Here are the steps. First, I created a folder that would hold both the objects under test and the unit tests.

After changing into the new unitTestsCSharp folder, I created a directory for the objects under test named ouut, which stands for Objects Under Unit Tests.

From ouut, I created a new project using the dotnet new classlib command for, well, you guessed it, the code to be tested.

Then, back in the unitTestsCSharp directory, I created a folder to hold the actual unit tests, creatively titled tests.

From the new tests folder I ran the dotnet new xunit command to create a new project for the unit tests.

I then opened the unitTestsCSharp folder in Visual Studio Code (VS Code). You may see a dialog in VS Code informing you that some assets required for building and debugging are missing and asking whether to add them. Select the Yes button.

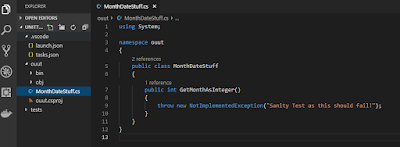

From the unitTestsCSharp/ouut folder I renamed Class1.cs to MonthDateStuff.cs as I wanted to do some basic Date/Time parsing. As many of you know, when using the test-driven development (TDD) methodology, you first create a failing implementation of the MonthDateStuff class.

    using System;

    namespace ouut
    {
        public class MonthDateStuff
        {
            public int GetMonthAsInteger()
            {
                throw new NotImplementedException("Sanity Test as this should fail!");
            }
        }
    }

Now we can look at the tests. Let's add the MonthDateStuff class library as a dependency to the test project using the dotnet add reference command.

Next, I changed the UnitTest1.cs name to MonthDateStuffTests.cs, added the using ouut directive, and then added a ReturnIntegerGivenValidDate method for an initial test.

    using System;
    using Xunit;
    using ouut;

    namespace tests
    {
        public class MonthDateStuffTests
        {
            private readonly MonthDateStuff _monthDateStuff;

            public MonthDateStuffTests()
            {
                _monthDateStuff = new MonthDateStuff();
            }

            [Fact]
            public void ReturnIntegerGivenValidDate()
            {
                var result = _monthDateStuff.GetMonthAsInteger();

                // Expect 1 (January): DateTime.Month is 1-based, so asserting 0 could never pass.
                Assert.Equal(1, result);
            }
        }
    }

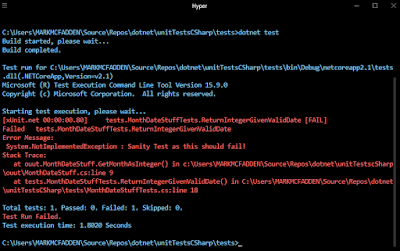

The [Fact] attribute lets the xUnit framework know that the ReturnIntegerGivenValidDate method is to be run by the test runner. From the tests folder, I ran dotnet test to build the tests and the class library and then run the tests. The dotnet test command starts the test runner using the unit test project, and the xUnit test runner contains the program entry point to run your tests. Here, as a sanity check, we want the test to fail, to at least make sure everything is set up properly.

Now, let's get the test to pass. Here is the code in the updated MonthDateStuff class.

    using System;

    namespace ouut
    {
        public class MonthDateStuff
        {
            public int GetMonthAsInteger()
            {
                var dateInput = "Jan 1, 2019";
                var theDate = DateTime.Parse(dateInput);
                return theDate.Month;
            }
        }
    }

Run dotnet test again to see the result.
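The implementation above hard-codes its input, so there is only ever one date to test. One possible next step, sketched here under assumptions, is to accept the date string as a parameter. The class name MonthDateParser and the dateInput parameter are hypothetical and do not appear in the original project. Parsing with CultureInfo.InvariantCulture is a choice made for this sketch so the result does not depend on the machine's regional settings.

```csharp
using System;
using System.Globalization;

namespace ouut
{
    // Hypothetical variant of MonthDateStuff, for illustration only.
    public class MonthDateParser
    {
        // Parses the given date string and returns its month number (1 = January).
        // Throws FormatException if the string is not a recognizable date.
        public int GetMonthAsInteger(string dateInput)
        {
            // Assumption: invariant-culture parsing, so "Jan 1, 2019" is read
            // the same way regardless of the current culture.
            var theDate = DateTime.Parse(dateInput, CultureInfo.InvariantCulture);
            return theDate.Month;
        }
    }
}
```

A method shaped like this can be exercised with many different inputs instead of a single fixed date.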

Let's add more features. xUnit has other attributes that enable you to write a suite of similar tests. Here are a few:

[Theory] represents a suite of tests that execute the same code but have different input arguments.

[InlineData] specifies values for those inputs.

Rather than creating several tests, use these two attributes to create a single theory. In this case, the theory is a method that tests several month integer values to validate that they are valid months, that is, between 1 and 12 inclusive:

    [Theory]
    [InlineData(1)]
    [InlineData(2)]
    [InlineData(3)]
    public void ReturnTrueGivenValidMonthInteger(int monthInt)
    {
        Assert.True(_monthDateStuff.IsValidMonth(monthInt));
    }

Note that four tests ran in total: the original fact plus the theory, which ran three times, once for each [InlineData] value.

For more information, see the xUnit documentation.
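The theory calls IsValidMonth, which the listings above do not show. Here is a minimal sketch of what such a method might look like, assuming a valid month is any integer from 1 through 12. The method body is an assumption made for illustration, not the original implementation, and in the real project it would sit alongside GetMonthAsInteger in the same class.

```csharp
namespace ouut
{
    public class MonthDateStuff
    {
        // Assumed implementation: returns true when monthInt is a calendar
        // month number, i.e. 1 (January) through 12 (December) inclusive.
        public bool IsValidMonth(int monthInt)
        {
            return monthInt >= 1 && monthInt <= 12;
        }
    }
}
```

With this sketch, the three [InlineData] values (1, 2, and 3) all satisfy the assertion, while boundary values such as 0 or 13 would be natural candidates for a second theory that expects false.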